For our purposes, we care about the “lx” column, which starts with 100,000 babies born (at age 0) and shows how many are still alive at a given age.

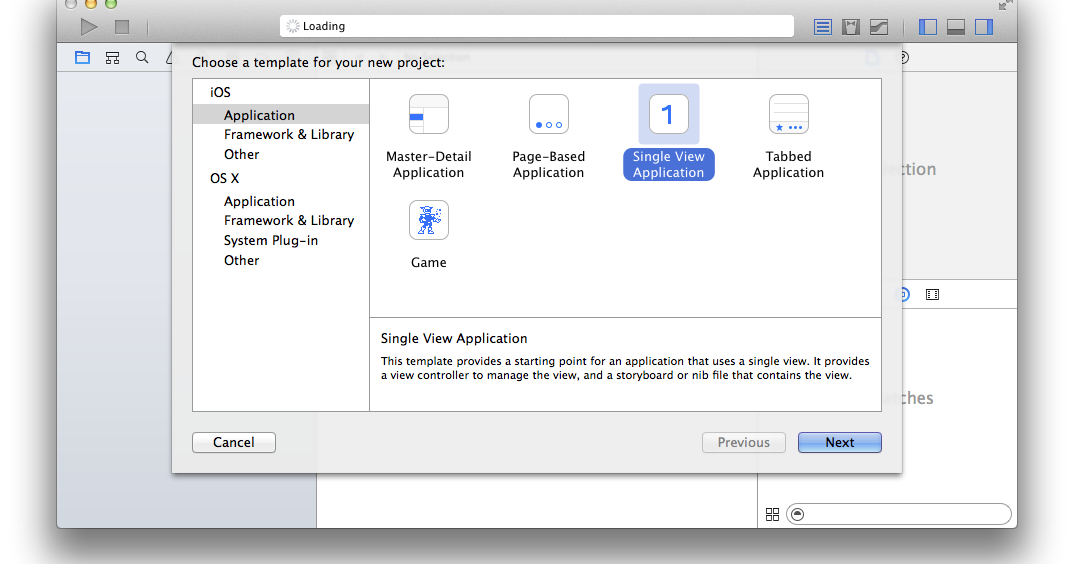
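Since “lx” counts survivors out of a starting cohort of 100,000, dividing by the cohort size turns it into a survival probability. The snippet below is a minimal sketch of that arithmetic; the names `COHORT`, `survival_probability`, and `conditional_survival` are made up for illustration and are not part of the article's scraper.

```rust
/// Size of the starting cohort in the life table (100,000 births at age 0).
const COHORT: u32 = 100_000;

/// Fraction of the original cohort still alive, given the "lx" value at some age.
fn survival_probability(lx: u32) -> f64 {
    f64::from(lx) / f64::from(COHORT)
}

/// Chance of reaching a later age given survival to an earlier one.
/// Returns `None` when nobody is alive at the earlier age (division by zero).
fn conditional_survival(lx_earlier: u32, lx_later: u32) -> Option<f64> {
    if lx_earlier == 0 {
        None
    } else {
        Some(f64::from(lx_later) / f64::from(lx_earlier))
    }
}
```

For instance, an “lx” value of 50,000 at some age would mean half of the cohort survived to that age.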



In the example we’ll look at below, to find the main data table, we find the table element that has the most rows, which should be stable even if the page changes significantly.

Validate, validate, validate!

Another way to guard against unexpected page changes is to validate as much as you can. Exactly what you validate will be pretty specific to the page you are scraping and the application you are using to do so.

In the example below, some of the things we validate include:

- If a row has any of the headers that we’re looking for, then it has all three of the ones we expect.
- The values are all between 0 and 100,000.
- The values are decreasing (we know to expect this because of the specifics of the data we’re looking at).
- After parsing the page, we’ve gotten at least 50 rows of data.

It’s also helpful to include reasonable error messages to make it easier to track down what invariant has been violated when a problem occurs.

Now, let’s look at an example of web scraping with Rust!

Building a web scraper with Rust

In this example, we are going to gather life expectancy data from the Social Security Administration (SSA). This data is available in “life tables” found on various pages of the SSA website. The page we are using lists, for people born in 1900, their chances of surviving to various ages. The SSA provides a much more comprehensive explanation of these life tables, but we don’t need to read through the entire study for this article.

The table is split into two parts, male and female. Each row of the table represents a different age (that’s the “x” column). The various other columns show different statistics about survival rates at that age.
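The "table with the most rows" heuristic and the validation checks listed earlier can be sketched without any HTML parsing. This is an illustration rather than the article's actual code: `Table`, `largest_table`, `check_headers`, and `validate_lx` are hypothetical names, tables are assumed to be already reduced to rows of cell text, and "decreasing" is interpreted as "never increasing" so that repeated values are accepted.

```rust
/// A parsed table reduced to rows of cell text. (Hypothetical stand-in for
/// whatever an HTML parser would hand back.)
type Table = Vec<Vec<String>>;

/// Picks the table with the most rows, or `None` if there are no tables.
fn largest_table(tables: &[Table]) -> Option<&Table> {
    tables.iter().max_by_key(|t| t.len())
}

/// A row must contain either none of the expected headers or all of them.
fn check_headers(row: &[&str], expected: &[&str]) -> Result<(), String> {
    let found = expected.iter().filter(|h| row.contains(*h)).count();
    if found != 0 && found != expected.len() {
        return Err(format!(
            "row has only {} of {} expected headers: {:?}",
            found,
            expected.len(),
            row
        ));
    }
    Ok(())
}

/// Checks a column of "lx" values: at least 50 rows, every value within
/// 0..=100,000, and no value larger than the one before it.
fn validate_lx(values: &[u32]) -> Result<(), String> {
    if values.len() < 50 {
        return Err(format!("expected at least 50 rows, found {}", values.len()));
    }
    if let Some(v) = values.iter().find(|&&v| v > 100_000) {
        return Err(format!("value {} is outside the range 0 to 100000", v));
    }
    for (i, pair) in values.windows(2).enumerate() {
        if pair[1] > pair[0] {
            return Err(format!(
                "values increase from {} to {} between rows {} and {}",
                pair[0],
                pair[1],
                i,
                i + 1
            ));
        }
    }
    Ok(())
}
```

Each error message names the violated invariant and the offending value, which makes it easier to see what changed when the page stops matching expectations.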

Web scraping refers to gathering data from a webpage in an automated way. If you can load a page in a web browser, you can load it into a script and parse the parts you need out of it!

However, web scraping can be pretty tricky. HTML isn’t a very structured format, so you usually have to dig around a bit to find the relevant parts. If the data you want is available in another way, either through some sort of API call or in a structured format like JSON, XML, or CSV, it will almost certainly be easier to get it that way instead. Web scraping can be a bit of a last resort because it can be cumbersome and brittle.

The details of web scraping highly depend on the page you’re getting the data from. Let’s go over some general principles of web scraping that are good to follow.

Be a good citizen when writing a web scraper

When writing a web scraper, it’s easy to accidentally make a bunch of web requests quickly. This is considered rude, as it might swamp smaller web servers and make it hard for them to respond to requests from other clients.

Also, it might be considered a denial-of-service (DoS) attack, and it’s possible your IP address could be blocked, either manually or automatically! The best way to avoid this is to put a small delay in between requests. The example we’ll look at later on in this article has a 500ms delay between requests, which should be plenty of time to not overwhelm the web server.

Aim for robust web scraper solutions

As we’ll see in the example, a lot of the HTML out there is not designed to be read by humans, so it can be a bit tricky to figure out how to locate the data to extract. One option is to do something like finding the seventh p element in the document. But this is very fragile: if the HTML page changes even a tiny bit, the seventh p element could easily be something different. It’s better to try to find something more robust that seems like it won’t change.
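The delay between requests recommended in the good-citizen section can be sketched with the standard library alone. This is a rough illustration, not the article's code: `fetch_politely` is a hypothetical helper, and the `fetch` closure stands in for whatever actually performs the HTTP request.

```rust
use std::thread::sleep;
use std::time::Duration;

/// Calls `fetch` once per URL, pausing for `delay` between consecutive requests.
/// `fetch` is a placeholder for the code that performs the real HTTP request.
fn fetch_politely<F>(urls: &[&str], delay: Duration, mut fetch: F) -> Vec<String>
where
    F: FnMut(&str) -> String,
{
    let mut pages = Vec::with_capacity(urls.len());
    for (i, &url) in urls.iter().enumerate() {
        // There is nothing to wait for before the very first request.
        if i > 0 {
            sleep(delay);
        }
        pages.push(fetch(url));
    }
    pages
}
```

Calling it with `Duration::from_millis(500)` would give the 500ms spacing mentioned above. Keeping the delay a parameter makes it easy to lengthen for smaller servers.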
Web scraping is a tricky but necessary part of some applications. In this article, we’re going to explore some principles to keep in mind when writing a web scraper. We’ll also look at what tools Rust has to make writing a web scraper easier.

- Be a good citizen when writing a web scraper
- Fetching the page with the Rust reqwest crate
- Parsing the HTML with the Rust scraper crate

Web scraping with Rust

Greg Stoll is a software engineer with over 20 years of experience in the industry. He enjoys working on projects in his spare time and enjoys writing about them!
